PointInverter: Point Cloud Reconstruction and Editing via a Generative Model with Shape Priors

Jaeyeon Kim1 Binh-Son Hua3 Duc Thanh Nguyen4 Sai-Kit Yeung1

1Hong Kong University of Science and Technology

2VinAI Research

3VinUniversity

4Deakin University

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2023

Our network

Overview of the PointInverter network.

Results

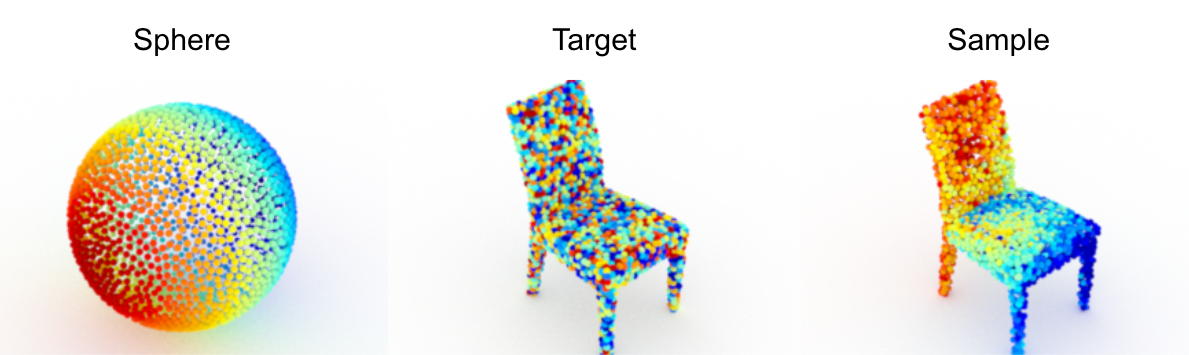

Inversion reconstruction. Point clouds inverted by PointInverter closely resemble the target input data.
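This page does not say how closeness to the target is measured. One common choice for point cloud reconstruction is the symmetric Chamfer distance, and the sketch below assumes that metric. It uses the mean-of-squared-distances variant, which is only one of several in use. The function name `chamfer_distance` is our own and does not come from PointInverter's code.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]

def _sq_dist(a: Point, b: Point) -> float:
    # Squared Euclidean distance between two 3D points.
    return sum((x - y) ** 2 for x, y in zip(a, b))

def chamfer_distance(p: List[Point], q: List[Point]) -> float:
    """Symmetric Chamfer distance: the mean squared nearest-neighbour
    distance from p to q, plus the same quantity from q to p.
    Brute-force O(|p| * |q|); an illustrative sketch, not optimized."""
    if not p or not q:
        raise ValueError("point sets must be non-empty")
    p_to_q = sum(min(_sq_dist(a, b) for b in q) for a in p) / len(p)
    q_to_p = sum(min(_sq_dist(b, a) for a in p) for b in q) / len(q)
    return p_to_q + q_to_p

if __name__ == "__main__":
    target = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    inverted = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.0)]
    # Lower is better; identical clouds give 0.0.
    print(chamfer_distance(target, inverted))
```

A value near zero means every target point has a close neighbour in the inverted cloud and the reverse also holds. Real pipelines usually replace the brute-force loop with a k-d tree or a batched GPU implementation.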

Dense correspondence. Reconstructions produced by our inversion model preserve the dense correspondence of SP-GAN.
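SP-GAN ties each generated point to a fixed point on a sphere prior. As we understand it, this means the i-th point of every generated shape can be treated as corresponding. If inverted reconstructions keep that fixed ordering (our assumption), two kinds of per-point operation can work by index alone, without nearest-neighbour matching. The first is transferring labels between shapes. The second is blending one shape into another. The helpers `transfer_labels` and `blend` below are hypothetical illustrations and are not part of PointInverter or SP-GAN.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

def transfer_labels(source_labels: Sequence[int],
                    target: Sequence[Point]) -> List[Tuple[Point, int]]:
    """Attach the source shape's per-point labels to the target by index.
    Only meaningful if point i in both clouds corresponds (assumption)."""
    if len(source_labels) != len(target):
        raise ValueError("corresponding clouds must have equal point counts")
    return [(p, label) for p, label in zip(target, source_labels)]

def blend(a: Sequence[Point], b: Sequence[Point], t: float) -> List[Point]:
    """Point-wise linear interpolation between two corresponding clouds:
    t = 0 returns a, t = 1 returns b."""
    if len(a) != len(b):
        raise ValueError("corresponding clouds must have equal point counts")
    return [tuple((1 - t) * x + t * y for x, y in zip(p, q))
            for p, q in zip(a, b)]
```

Without correspondence, both operations would first need a point-matching step. Index-based pairing would then mix unrelated parts of the two shapes.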

Citation

@inproceedings{jy-pointinverter-wacv23,
  title = {PointInverter: Point Cloud Reconstruction and Editing via a Generative Model with Shape Priors},
  author = {Jaeyeon Kim and Binh-Son Hua and Duc Thanh Nguyen and Sai-Kit Yeung},
  booktitle = {IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
  year = {2023}
}

This research project is partially supported by an internal grant from HKUST (R9429).